convention 4 kubernetes: c4k-taiga

chat over e-mail | team@social.meissa-gmbh.de | taiga & Blog

Configuration Issues

We currently cannot log in, even after running python manage.py createsuperuser --noinput in the taiga-back-deployment container. What might help: https://docs.taiga.io/setup-production.html#taiga-back

Note: taiga-manage, -back and -async use the same docker images with different entry points.

https://github.com/kaleidos-ventures/taiga-docker https://community.taiga.io/t/taiga-30min-setup/170

Steps to start and get an admin user

Philosophy: First create the superuser, then populate the DB.

- https://docs.taiga.io/setup-production.html#taiga-back

- https://docs.taiga.io/setup-production.html#_configure_an_admin_user

- https://github.com/kaleidos-ventures/taiga-back/blob/main/docker/entrypoint.sh

In the init container we create the superuser. The only difference between the init container and the regular container is CELERY_ENABLED: false. The init container gets the following command and args:

command: ["/bin/bash"]

args: ["-c", "source /opt/venv/bin/activate && python manage.py createsuperuser --noinput"]

Thus the dockerfile default entrypoint is ignored.
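For orientation, the command and args above could sit in a pod spec roughly as follows. This is a minimal sketch, not the manifest generated by c4k-taiga: the container name, the image tag and the secret name taiga-secret are assumptions. The DJANGO_SUPERUSER_* variables are the ones Django's createsuperuser --noinput reads.

```yaml
# Fragment of a pod spec (spec.template.spec of the taiga-back deployment).
# Container name, image tag and secret name are illustrative assumptions.
initContainers:
  - name: taiga-back-init
    image: taigaio/taiga-back:latest
    # command replaces the image ENTRYPOINT, args replaces its CMD.
    command: ["/bin/bash"]
    args: ["-c", "source /opt/venv/bin/activate && python manage.py createsuperuser --noinput"]
    env:
      - name: CELERY_ENABLED
        value: "false"
      # createsuperuser --noinput takes the credentials from these variables.
      - name: DJANGO_SUPERUSER_USERNAME
        valueFrom:
          secretKeyRef:
            name: taiga-secret
            key: DJANGO_SUPERUSER_USERNAME
      - name: DJANGO_SUPERUSER_EMAIL
        valueFrom:
          secretKeyRef:
            name: taiga-secret
            key: DJANGO_SUPERUSER_EMAIL
      - name: DJANGO_SUPERUSER_PASSWORD
        valueFrom:
          secretKeyRef:
            name: taiga-secret
            key: DJANGO_SUPERUSER_PASSWORD
```

The init container also needs the same database settings as the regular taiga-back container, which are omitted here.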

Option 1: Init container, currently under test

Create an init container (celery disabled) with the python manage.py command and the taiga-manage createsuperuser args

Option 2: Single container

Create a single container that has celery disabled at the beginning. Runs the following cmds:

- python manage.py taiga-manage createsuperuser

- enable celery

- execute entrypoint.sh

HTTPS

Terminated at the ingress. How does this interact with taiga? This may become relevant here: https://github.com/kaleidos-ventures/taiga-docker#session-cookies-in-django-admin
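TLS termination at the ingress could look roughly like the sketch below. The host taiga.example.org, the TLS secret taiga-tls and the backend service taiga-gateway are placeholders, not names taken from this repository.

```yaml
# Sketch of an Ingress that terminates TLS and forwards plain HTTP to taiga.
# Host, secret name and backend service are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: taiga-ingress
spec:
  tls:
    - hosts:
        - taiga.example.org
      secretName: taiga-tls
  rules:
    - host: taiga.example.org
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: taiga-gateway
                port:
                  number: 80
```

With this setup the taiga pods only see unencrypted traffic, which is why the cookie settings linked above may matter.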

Docker Compose (DC) -> Kubernetes

We implemented a deployment and a service in kubernetes for each DC service. We introduced configmaps and secrets to reduce redundancy, improve readability and increase security a bit. For all volumes described in DC we implemented PVCs and volume refs.

A config.py (used for taiga-back ) was introduced for reference. A config.json (used for taiga-front ) was introduced for reference. NB: It might be necessary to actually map both from a config map to their respective locations in taiga-back and taiga-front. Description for that is here. A mix of both env-vars and config.py in one container is not possible.
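Mapping config.py from a config map into taiga-back could be sketched like this. The config map name is hypothetical, and the mount path /taiga-back/settings/config.py is an assumption based on the taiga-docker documentation; verify it against the image in use.

```yaml
# Fragment of the taiga-back pod spec (spec.template.spec).
# Config map name and mount path are assumptions, see the note above.
containers:
  - name: taiga-back
    image: taigaio/taiga-back:latest
    volumeMounts:
      - name: taiga-back-config
        mountPath: /taiga-back/settings/config.py
        # subPath mounts the single key as a file instead of shadowing the directory.
        subPath: config.py
volumes:
  - name: taiga-back-config
    configMap:
      name: taiga-back-config
```

The same pattern would apply to the taiga-front config file, with its own config map and target path.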

depends_on

We currently assume that it will work without explicitly defining a startup order.
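Should a startup order turn out to be necessary, one common substitute for depends_on is an init container that blocks until a dependency is resolvable. The sketch below follows the pattern from the Kubernetes init container documentation; the service name taiga-db is a placeholder.

```yaml
# Fragment of a pod spec: blocks pod startup until the service name resolves.
# The service name taiga-db is hypothetical.
initContainers:
  - name: wait-for-db
    image: busybox:1.28
    command:
      - sh
      - -c
      - "until nslookup taiga-db; do echo waiting for taiga-db; sleep 2; done"
```

This only checks that the service exists in DNS, not that the database behind it accepts connections.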

DC Networking

https://github.com/compose-spec/compose-spec/blob/master/spec.md

The hostname keyword sets the hostname of a container.

It should have no effect on the discoverability of the container in kubernetes.

The networks keyword defines the networks that service containers are attached to, referencing entries under the top-level networks key.

This should be taken care of by our kubernetes installation.

Pod to Pod Possible Communications

Taiga containers that need to reach other taiga containers:

- taiga-async -> taiga-async-rabbitmq

- taiga-events -> taiga-events-rabbitmq

This is not quite clear, but probably solved with the implementation of services.
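As an illustration of how a service makes one pod reachable from another, a service for the async broker might look like this. The pod label in the selector is an assumption; 5672 is the standard AMQP port.

```yaml
# Sketch of a service that exposes the rabbitmq pods under a stable DNS name.
# The selector label is an assumed pod label.
apiVersion: v1
kind: Service
metadata:
  name: taiga-async-rabbitmq
spec:
  selector:
    app.kubernetes.io/name: taiga-async-rabbitmq
  ports:
    - name: amqp
      port: 5672
      targetPort: 5672
```

Inside the same namespace, taiga-async could then reach the broker at taiga-async-rabbitmq:5672.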

Deployments

Separate deployments exist for each of the taiga modules:

Taiga-back reads many values in config.py from env vars as can be seen in the taiga-back config.py. These are read from configmaps and secrets in the deployment.
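Feeding env vars from a config map and a secret into a container can be sketched as follows. The names taiga-configmap and taiga-secret are placeholders, not necessarily the ones this project generates.

```yaml
# Fragment of the taiga-back pod spec (spec.template.spec).
# Config map and secret names are hypothetical.
containers:
  - name: taiga-back
    image: taigaio/taiga-back:latest
    envFrom:
      # Every key of the config map becomes an environment variable.
      - configMapRef:
          name: taiga-configmap
      # Sensitive values such as passwords come from the secret.
      - secretRef:
          name: taiga-secret
```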

Purpose

Easily generate a config for a small-scale Taiga deployment, complete with an initial superuser and configurable values for flexibility.

Status

WIP. We still need to implement the backup solution for full functionality.

Try out

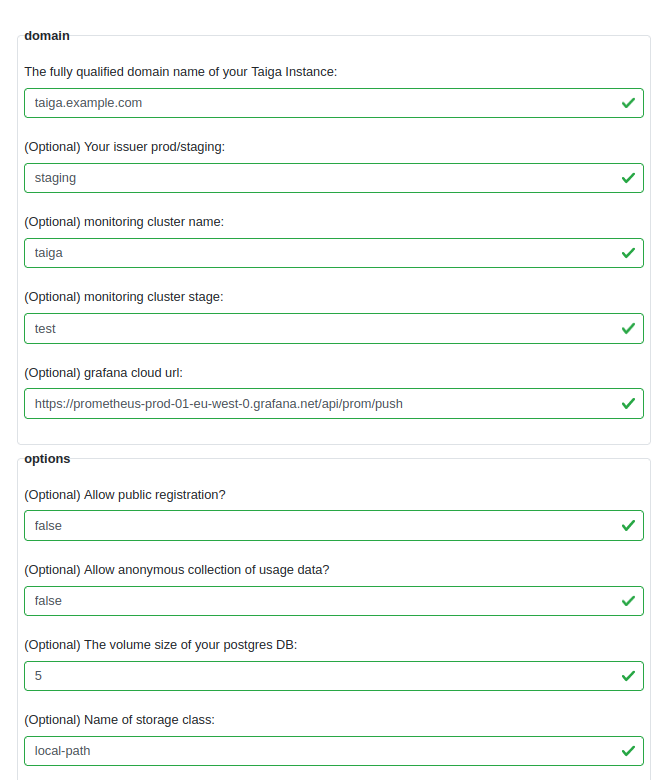

Click on the image to try out live in your browser:

Your input will stay in your browser. No server interaction is required.

Usage

You need:

- A working DNS route to the FQDN of your taiga installation

- A kubernetes cluster provisioned by provs

- The .yaml file generated by c4k-taiga

Apply this file on your cluster with kubectl apply -f yourApp.yaml.

Done.

Development & mirrors

Development happens at: https://repo.prod.meissa.de/meissa/c4k-taiga

Mirrors are:

- https://gitlab.com/domaindrivenarchitecture/c4k-taiga (issues and PR, CI)

- https://github.com/DomainDrivenArchitecture/c4k-taiga

For more details about our repository model see: https://repo.prod.meissa.de/meissa/federate-your-repos

License

Copyright © 2022 meissa GmbH

Licensed under the Apache License, Version 2.0 (the "License")

Pls. find licenses of our subcomponents here